Charles Darwin published On the Origin of Species in 1859. It is somewhat remarkable that some of the theories set out in that work can still be verified more than 150 years later, in fields of human knowledge as different from biology as software development.

To set the context for what follows, and before looking at how Darwin’s ideas affect us, let’s take a little trip and visit some of the most successful Internet companies, whose websites have astronomical numbers of users, millions of pages served per day, and countless completed transactions.

The voyage aboard the Beagle

What is dangerous is not to evolve – Jeff Bezos, CEO & President of Amazon.com

Darwin embarked on a journey of nearly five years aboard HMS Beagle, which allowed him to study many animal species and gather valuable evidence for the theories that later appeared in his work. It’s much easier for us to study the species we are interested in, since we only need a few glances at content that is publicly available online.

We are going to start in Seattle, where a small online bookstore founded in a garage back in the 90’s has ended up becoming a global bazaar where you can buy anything from the mythical t-shirt of three wolves howling at the full moon (if you don’t know the product, I recommend reading the customer reviews) to genuine uranium.

Amazon serves 137 million customers per week and has an annual revenue of 34 billion dollars. If all of its active users came together in a country, it would have twice as many people as Canada. You might imagine that, with such amazing figures, introducing new features on the website is something they weigh very carefully and cannot afford to do very often, because of the risk of bugs and unexpected errors that could lead to huge losses.

Right?

No. Nothing could be further from the truth. By 2011, Amazon was releasing changes to production every 11.6 seconds on average, involving up to 30,000 servers simultaneously. I don’t have more recent numbers, but given how the business has evolved, it is reasonable to assume that these figures have only become more striking.

The biggest risk is not taking any risk… In a world that’s changing really quickly, the only strategy that is guaranteed to fail is not taking risks. – Mark Zuckerberg, CEO & Chairman of Facebook

800 miles south, in Menlo Park (California), what began as a social experiment for a group of undergraduates is now serving more than one billion active users, who upload 250 million images a day and view one trillion pages per month. The figures are dizzying. The effect is so strong that some parents have even named their children Facebook, literally.

The source code for Facebook is compiled into a binary weighing 1.5 GB and is maintained by more than 500 developers. Stakes are high for each change and deployment. Anyone would expect every change to be made only after thorough verification by a strict QA team, and never without the explicit approval of a horde of managers, armed to the teeth with the most inflexible bureaucracy.

Or maybe not?

In fact, whoever imagines it that way is completely mistaken. Minor changes are released into production at least once a day, and a major version is deployed once a week. Almost all the code is modified directly on the main line; they don’t use branches to protect its integrity. Everyone does testing and can file bugs. Everything is automated to the maximum.

In 100 years people will look back on now and say, ‘That was the Internet Age.’ And computers will be seen as a mere ingredient to the Internet Age. – Reed Hastings, CEO of Netflix

Not far from there, also in California, Netflix does business from a town called Los Gatos. It is the largest online service for movies and television shows, which it streams to its more than 25 million subscribers.

Netflix services receive frequent attacks that put them at risk and even lead to failures in specific nodes, making interventions necessary in order to prevent further problems. So far, that’s not so different from any other big company providing Internet services. What makes the case of Netflix extraordinary is that most of these attacks are caused… by themselves!

How can it be possible? Have they gone nuts? Are there disgruntled employees trying to sabotage the company from within?

Not really. These attacks are perfectly orchestrated by the Simian Army, the horde of little nuisances developed by Netflix to push the boundaries of their own infrastructure and applications. The Chaos Monkey randomly disables instances to ensure that the service can survive this type of failure. The Latency Monkey simulates delays and loss of connectivity. The Conformity Monkey shuts down instances that do not adhere to a defined set of best practices, immediately and without remorse. And so on… there’s even a Chaos Gorilla, the Chaos Monkey’s bodyguard, which causes an outage across the entire cloud availability zone where it monkeys around. The consequence is that, when these problems occur unexpectedly, all their systems are already prepared to deal with them, since the team has been able to test the procedures, and the code has already been modified to mitigate the consequences. If this sounds interesting to you, you can even take a look at how it is implemented.
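To make the Chaos Monkey idea a bit more tangible, here is a minimal sketch in Python. It is not Netflix’s implementation: the cluster, the instance names and the `service_is_available` rule are assumptions made up for illustration. It only shows the core move, which is to disable a random running instance on purpose and then check that the service as a whole is still up.

```python
import random


def chaos_monkey(instances, rng):
    """Disable one randomly chosen running instance and return its name.

    `instances` maps a (hypothetical) instance name to True if it is running.
    Returns None when there is nothing left to disable.
    """
    running = [name for name, up in instances.items() if up]
    if not running:
        return None
    victim = rng.choice(running)
    instances[victim] = False
    return victim


def service_is_available(instances, min_healthy=1):
    """Assumed availability rule: enough instances must still be running."""
    return sum(instances.values()) >= min_healthy


# Hypothetical three-node cluster used only for this example.
cluster = {"web-1": True, "web-2": True, "web-3": True}
rng = random.Random(42)  # seeded so that a drill can be repeated

victim = chaos_monkey(cluster, rng)
print(f"Chaos Monkey disabled {victim}; "
      f"service available: {service_is_available(cluster)}")
```

In a real drill the interesting part is what happens next: if the availability check fails, the team has found a weakness on its own schedule rather than during a real outage.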

In this business, by the time you realize you’re in trouble, it’s too late to save yourself. Unless you’re running scared all the time, you’re gone. – Bill Gates, Co-founder of Microsoft

Now we return to our first stop, Seattle. Nearby, in Redmond, the Team Foundation Service team serves many other development teams worldwide, providing a tool that supports the complete application lifecycle: planning, collaboration, version control, testing, automated builds, etc. With a user base distributed around the world and working in all time zones, availability is critical; anyone working in software development knows the hassle of losing access to version control or not being able to use the build server. The usual approach in these cases is to focus on a stable set of features that provides an adequate service to users, with minimal changes over time; that way, the availability of the service is not put at risk by defects introduced with new features.

Do you agree with this approach?

They don’t! The trend since the product launch has been to introduce new features continuously, with a cadence of about three weeks. And we’re not talking about minor or cosmetic changes; those updates have included such important features as automated deployment to Azure, Git integration, or customizable Kanban boards. The few service outages so far have been mostly predictable, and for many of them the user has been alerted so she could be prepared.

Just as Darwin did aboard the Beagle, we could continue our journey indefinitely in search of peculiar species, in search of many other organizations working in a way that seems to defy common sense and established rules:

- Flickr deploys several times a day and, until recently, reported on its website the time of the last deployment and how many changes it included.

- At Spotify, where they maintain over 100 different systems among clients, backend services, components, etc., any of the 250 developers is authorized to modify any of these systems directly if that is needed to implement a feature.

- Etsy experiments with new features directly in production, a technique known as A/B testing, to identify the changes that attract the most interest from customers.
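As an illustration of the A/B testing mentioned in the last item, the following Python sketch shows one common way to split users between the current behavior (A) and a new feature (B). It is not how Etsy does it; the function names, the experiment name and the 50/50 split are assumptions for the example. Hashing the user id makes the assignment stable, so the same user always sees the same variant.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to 'A' (control) or 'B' (new feature)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a value in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < treatment_share else "A"


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the action we care about."""
    return conversions / visitors if visitors else 0.0


# Hypothetical experiment: split 1,000 made-up users into the two variants.
counts = {"A": 0, "B": 0}
for i in range(1000):
    counts[assign_variant(f"user-{i}", "new-checkout")] += 1
print(counts)
```

Comparing `conversion_rate` for each variant once enough traffic has gone through is what tells us whether the change is worth keeping.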

What conclusions can be drawn in the light of all this information?

Have they all gone completely mad?

Or are we discovering a new way of working that breaks with many of the preconceived ideas considered valid so far in software development?

For example, it may seem counter-intuitive that the more deployments you do, the fewer problems you’ll have while deploying. We’ve all had that fateful release on a Friday (you know, if it’s not on Friday, you can’t call it a real deployment…), which forced us to spend the whole weekend struggling to get the fuc#$@& application up. The natural reaction is to avoid further deployments with all our strength and postpone them as long as possible, because we know they will hurt again. After all, the more attempts there are, the more likely it is that one of them fails, isn’t it?

Well, usually not. In fact, repetition leads to more predictable and controllable deployments, with less uncertainty and much smaller, more manageable issues. The underlying philosophy is that if something hurts, rather than avoiding it, you should do it more often, and that way you’ll make the pain more bearable. Or, putting it another way, a succession of small pains is more acceptable than one large, concentrated, traumatic pain.

Is it feasible that any single developer has the power to release any changes she deems ready to deliver? Yes, if that change is subjected to a verification process that ensures that it will not break anything once it has been released.

Is it reckless to remove from this verification process a whole chain of bureaucracy, requests, approvals, meetings between departments and a comprehensive control of the process by adequately trained roles?

It is not reckless, as long as all or most of these verifications have been coded and are run as automated acceptance, regression and smoke tests and unattended deployments, with an easy way to check the status of the whole process. Not only is it not reckless, but it will far exceed the reliability of a group of humans doing the same process manually (or even worse, a random variation of it), often in a state of boredom and with poor concentration. I am not talking about completely eliminating manual steps, which is usually impossible: at the very least there will be a manual first run of acceptance tests, so the user can verify that the development team has understood what the particular requirement was meant to address. Or there might be some special device in our environment for which we cannot set up a fully automated deployment. But we can always deal with the rest of our process, and aim to reduce these manual steps as much as possible.

Continuous Delivery is a discipline, a way of working, or a set of patterns and practices, that bears all these factors in mind and takes full advantage of them. We’re going to rely on techniques such as test and deployment automation, continuous integration, transparency and visibility throughout the entire process, detailed scrutiny of all the dependencies and configuration parameters that affect the delivery of our software, early detection and handling of problematic changes, and many others. The goal is that any change in our code, however slight, becomes a candidate for release as soon as it is committed to version control, and that it will indeed be released if nothing makes us (automatically) discard it along the way.
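The idea that every commit is a release candidate until some automated step rejects it can be sketched in a few lines of Python. This is a toy model, not a real build tool: the stage names and the dictionary describing a change are assumptions for the example, and in practice each check would call a compiler, a test runner or a deployment script.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a check that says whether the change may go on.
Stage = Tuple[str, Callable[[dict], bool]]


def run_pipeline(change: dict, stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run the stages in order and discard the change at the first failure."""
    log = []
    for name, check in stages:
        passed = check(change)
        log.append(f"{name}: {'passed' if passed else 'FAILED'}")
        if not passed:
            return False, log  # later stages are never run
    return True, log


# Hypothetical stages; each lambda stands in for a real automated step.
stages = [
    ("build", lambda c: c["compiles"]),
    ("unit tests", lambda c: c["unit_tests_pass"]),
    ("acceptance tests", lambda c: c["acceptance_tests_pass"]),
    ("deploy to staging and smoke tests", lambda c: c["smoke_tests_pass"]),
]

good_change = {"compiles": True, "unit_tests_pass": True,
               "acceptance_tests_pass": True, "smoke_tests_pass": True}
bad_change = {"compiles": True, "unit_tests_pass": True,
              "acceptance_tests_pass": False, "smoke_tests_pass": True}

for change in (good_change, bad_change):
    releasable, log = run_pipeline(change, stages)
    print("releasable" if releasable else "discarded", log)
```

Because every change goes through exactly the same sequence, the log doubles as the visible, traceable record of why a change was or was not released.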

It is not only about continuous deployment, as many mistakenly assume, since you can be deploying crap and still do it automatically and continuously. Nor is it just continuous automated testing. It comprises these practices but also many others: all of those needed to be confident that a change is ready for use and that the user can benefit from it.

Of course, for this to succeed, close collaboration among everyone involved is needed, in an environment where barriers and departmental silos have been removed. This is something that movements like DevOps are also addressing.

What is the benefit?

If we stick to the results, the figures from these companies, we could say that the benefit is huge. But in order to avoid falling into the ‘correlation implies causation’ fallacy, we should be more specific and focus on the context of the software development process. What we find then is:

- A transparent and predictable delivery process. For each change, we always go through the same sequence of steps, and these are automated as far as possible. No surprises.

- Fewer defects in production. The defects appear and are addressed in earlier stages, even automatically. The standardized delivery process prevents any of these defects from ending up in production because of a misunderstanding, or because of the work being done in a different way. It also provides traceability of the origin of these problems.

- Flexibility to undertake changes. Changes are addressed in smaller, more manageable pieces. They are implemented and delivered promptly.

- Immediate and useful feedback about changes, even from the production environment: whether they are running smoothly, how users are accepting them, or what their impact on the business is.

- Less time required to deploy and release into production, since everything has been automated as much as possible.

- Empowered teams, motivated by the confidence that has been put in them, and the continued feeling of delivering increments of tangible value.

All of this sounds great, but it’s not for me

The adoption of Continuous Delivery practices can be worth it, even if you do not need or do not want to release your software so frequently. Overall, the aforementioned benefits should be the same, so any team willing to improve could consider adopting this approach.

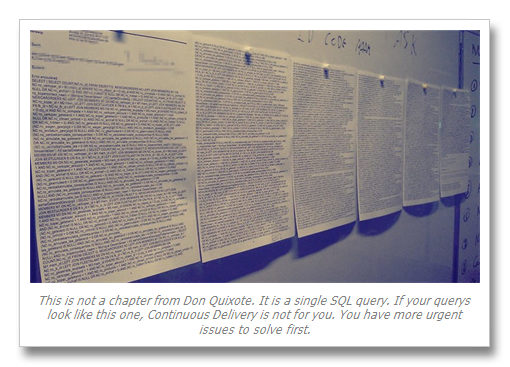

There are very special cases where Continuous Delivery might not be the best option, or might even be counterproductive. I, for one, have found very few of them. Most of the time, these are scenarios where the results will not justify the effort of adopting the practices: legacy systems, outdated technologies, lack of adequate tools to set up the environment, designs not prepared for automation or testing, etc.

But the real problem, which unfortunately appears quite often, comes when the team or the organization itself does not adopt an open attitude to change and improvement. We could say that deep inside, even unconsciously, they do not want a transparent delivery process, they don’t need fewer defects in production, or they don’t want flexibility to cope with changes. Externally this manifests itself as the decision not to invest in the necessary improvements. The most common example is the typical argument of the kind ‘our case is very unusual,’ ‘our system is very complex,’ ‘we deal with a very delicate business,’ ‘our users are very special,’ ‘my boss would never let me,’ ‘my mom won’t let me’ or ‘insert your favorite excuse here.’ Among these, it is quite frequent to hear ‘we can’t afford to invest in it,’ when in fact, as we will see in a moment, what you certainly can’t afford is not to invest in it.

Is your system bigger than Team Foundation Service?

Do you deal with a more complex business than Amazon?

Are your users more demanding than Facebook’s? Do they have more special requirements?

Do you have to serve a bigger volume of data than Netflix?

If you’re among the vast majority, those who would respond negatively to these questions, chances are that you are just feeling lazy about addressing the transition to a Continuous Delivery model. In that case, my advice is to be careful, because your organization can suffer the same fate as the dodo or the thylacine.

Natural selection

We left Darwin aboard the Beagle, sailing the seven seas in search of unique species. At the end of his voyage, he was perplexed by the variety of wildlife and fossils he had found, so he began an investigation that led him to formulate the theory of natural selection in his book On the Origin of Species.

It is not the strongest of the species that survives, nor the most intelligent that survives. It is the one that is the most adaptable to change. In the long history of humankind (and animal kind, too) those who learned to collaborate and improvise most effectively have prevailed.

Attributed to Charles Darwin, English naturalist

Natural selection states that the members of a population whose characteristics are better adapted to their environment are more likely to survive. What about the others? Well, sooner or later they’ll end up disappearing.

It is a law that applies to living organisms, but if you think about it for a moment, what is an organization but a big living organism? Of course natural selection applies to companies and organizations, as any list of extinct companies demonstrates.

In the constantly changing environment in which most businesses operate, it is no longer enough to offer nice and cheap products. You have to deliver them sooner, and evolve them quickly in response to the demands of the users. Keeping track of metrics such as team velocity or defect rate is insufficient. The metrics that are making a difference between those who succeed and those who get stuck on the way are others:

- Cycle time: the average time elapsed from when we start working on a feature until it is released in production.

- Mean time to failure (MTTF): how long it takes, on average, for my system to suffer from a big issue or an outage.

- Mean time to recover (MTTR): how long it takes, on average, for my system to be fully functional again after a big problem or an outage.

Natural selection will favor those who are able to keep cycle time and MTTR as small as possible and MTTF as large as possible, and this is exactly one of the areas where Continuous Delivery can help the most.
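If you want to start tracking cycle time, MTTF and MTTR, they can be computed from very little data. The Python sketch below assumes we have two hypothetical logs: one with the start and release time of each feature, and one with the start and end time of each outage. The dates are invented for the example. MTTF is taken here as the average uptime between the end of one outage and the start of the next, which is one common reading of the definition above and needs at least two recorded outages.

```python
from datetime import datetime, timedelta
from statistics import mean


def cycle_time(features):
    """Average time from starting work on a feature to releasing it."""
    return timedelta(seconds=mean(
        [(released - started).total_seconds() for started, released in features]))


def mttr(outages):
    """Mean time to recover: average duration of an outage."""
    return timedelta(seconds=mean(
        [(up - down).total_seconds() for down, up in outages]))


def mttf(outages):
    """Mean time to failure: average uptime between consecutive outages."""
    ordered = sorted(outages)
    gaps = [(next_down - up).total_seconds()
            for (_, up), (next_down, _) in zip(ordered, ordered[1:])]
    return timedelta(seconds=mean(gaps))


# Invented sample data: (started, released) and (went down, came back up).
features = [
    (datetime(2013, 1, 1), datetime(2013, 1, 4)),
    (datetime(2013, 1, 2), datetime(2013, 1, 3)),
]
outages = [
    (datetime(2013, 1, 10, 8, 0), datetime(2013, 1, 10, 9, 0)),
    (datetime(2013, 1, 20, 9, 0), datetime(2013, 1, 20, 9, 30)),
]

print("Cycle time:", cycle_time(features))
print("MTTR:", mttr(outages))
print("MTTF:", mttf(outages))
```

Even a rough version of these numbers, recalculated after every release, shows whether a team is moving in the right direction.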

OK, I don’t want to become extinct. Where should I start?

Throughout this article we have focused on showing the benefits of Continuous Delivery and what could happen if we ignore it. But we have not covered in any depth how to implement it, and given the large number of patterns and practices to consider, it can end up being a process that is far from trivial.

Undoubtedly, there is a cultural side, which will require us to work within our organization to remove barriers and silos, and to improve collaboration as much as possible.

There are lots of available resources that can help us to get started, but without any doubt the most valuable one is the excellent book by Jez Humble and David Farley, Continuous Delivery.

If your environment is based on Microsoft technologies, fortunately we have great tools available that can support most of the aforementioned practices. Visual Studio and Team Foundation Server, and related tools, will help us to implement the whole Continuous Delivery ‘pipeline’, from automating all kinds of testing and deployment, to more specific topics such as static code analysis or continuous integration. It is true that these tools require customization work and some tweaking in order to suit the model we are proposing, but here at Plain Concepts we can help you to prepare the environment that best suits your project; it’s something that we’ve done before for many organizations, and it seems that none of them has become extinct yet.

Also, if you want to get an overall idea about how Team Foundation Server can be customized in order to support Continuous Delivery, you can have a look at my presentation on this topic at ALM Summit 3.

And if you can afford to wait a bit longer, right now I’m working with the Microsoft Patterns & Practices team on a new book about the subject that will be available in a few months, where we will cover in depth whatever is needed to put these ideas into practice in an effective way. More news about it very soon!