Some time back, while preparing the Global Azure Bootcamp Science Lab, I ran into the lack of some functions when authoring Azure Resource Manager (ARM) templates. When creating some types of resources, such as Batch jobs or RBAC-related resources, you need to pass a GUID (globally unique identifier), but there is no function to create one inside the template, so you have to pass GUIDs in as template parameters, which makes the result awkward.

There is already a feedback item describing the issue (please vote for it at https://feedback.azure.com/forums/281804-azure-resource-manager/suggestions/13067952-provide-guid-function-in-azure-resource-manager-te), and similar problems come up when you need more sophisticated functions, such as date-related values.

While looking for a workaround, I found that this could be solved with nested templates, so I started by building a simple ARM GUID template that can be referenced from your primary template. You can check that repo here: https://github.com/davidjrh/azurerm-newguid

But when testing the GAB lab with millions of GUIDs, I found that from time to time that template generated duplicated GUIDs, so I ended up implementing the lab's ARM GUID templates with a Web API. You can check how the GUID template was used at https://github.com/intelequia/GAB2017ScienceLab/blob/master/lab/assets/GABClient.json#L100 (see lines 100 and 398), and the template API implementation at https://github.com/intelequia/GAB2017ScienceLab/blob/master/src/GABBatchServer/src/GAB.BatchServer.API/Controllers/TemplatesController.cs

Building a GUID function for ARM templates with Azure Functions

Revisiting this today, I thought it could be more cost-effective to generate that template dynamically with an Azure Function. Here is the step-by-step guide so you can deploy your own, and grow your arsenal of ARM functions with the same approach.

Remember that the basic idea is to reference this function from your deployment as a nested template; the function generates a GUID that you can use later in your template.

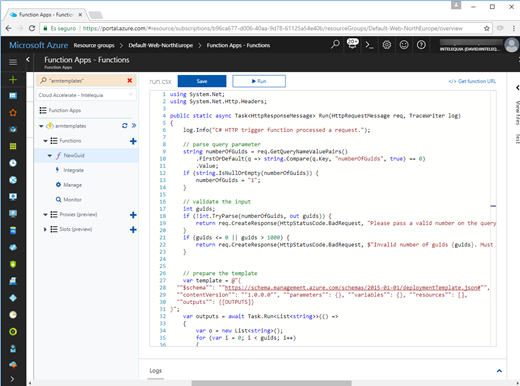

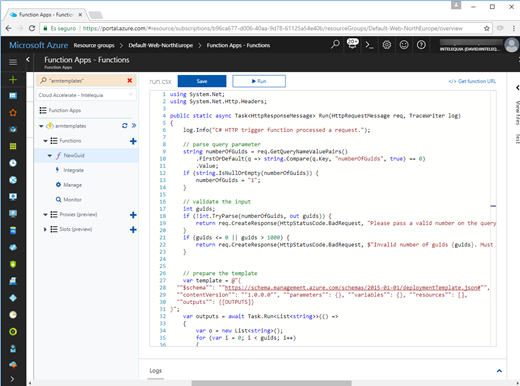

- Create an Azure Function App through the Azure Portal.

- Add a C# function triggered by an HTTP request.

- Copy and paste the following code into the function body:

```csharp
using System.Net;
using System.Net.Http.Headers;
using System.Text;

public static HttpResponseMessage Run(HttpRequestMessage req, TraceWriter log)
{
    log.Info("C# HTTP trigger function processed a request.");

    // Parse the optional query parameter (defaults to 1 GUID)
    string numberOfGuids = req.GetQueryNameValuePairs()
        .FirstOrDefault(q => string.Compare(q.Key, "numberOfGuids", true) == 0)
        .Value;

    if (string.IsNullOrEmpty(numberOfGuids))
    {
        numberOfGuids = "1";
    }

    // Validate the input
    int guids;
    if (!int.TryParse(numberOfGuids, out guids))
    {
        return req.CreateResponse(HttpStatusCode.BadRequest, "Please pass a valid number on the query parameter 'numberOfGuids'");
    }

    if (guids <= 0 || guids > 1000)
    {
        return req.CreateResponse(HttpStatusCode.BadRequest, $"Invalid number of guids {guids}. Must be a number between 1 and 1000");
    }

    // Prepare an empty deployment template; [OUTPUTS] is replaced below
    var template = @"{
  ""$schema"": ""https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#"",
  ""contentVersion"": ""1.0.0.0"", ""parameters"": {}, ""variables"": {}, ""resources"": [],
  ""outputs"": {[OUTPUTS]}
}";

    // One string output per GUID: guid0, guid1, ...
    var outputs = new List<string>();
    for (var i = 0; i < guids; i++)
    {
        outputs.Add(@"""guid" + i + @""": { ""type"": ""string"", ""value"": """ + Guid.NewGuid() + @""" }");
    }

    var result = template.Replace("[OUTPUTS]", string.Join(",", outputs));

    // Return the generated template as JSON
    return new HttpResponseMessage(HttpStatusCode.OK)
    {
        Content = new StringContent(result, Encoding.UTF8, "application/json")
    };
}
```
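The string concatenation above works because a GUID never contains characters that need escaping in JSON. If you would rather not hand-build JSON, here is a minimal sketch of the same template built with System.Text.Json. It assumes a .NET Core 3.0+ runtime (newer than the Functions v1 script runtime used above), and `GuidTemplateBuilder` and `Build` are hypothetical names; moving the logic into a pure method also makes it easy to unit test.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper (not part of the original function): builds the same
// deployment template, letting System.Text.Json handle quoting and escaping.
public static class GuidTemplateBuilder
{
    public const int MaxGuids = 1000;

    public static string Build(int numberOfGuids)
    {
        // Same 1..1000 limit as the function above
        if (numberOfGuids <= 0 || numberOfGuids > MaxGuids)
            throw new ArgumentOutOfRangeException(nameof(numberOfGuids),
                $"Must be a number between 1 and {MaxGuids}");

        // One string output per GUID: guid0, guid1, ...
        var outputs = new Dictionary<string, object>();
        for (var i = 0; i < numberOfGuids; i++)
        {
            outputs["guid" + i] = new Dictionary<string, string>
            {
                ["type"] = "string",
                ["value"] = Guid.NewGuid().ToString()
            };
        }

        var template = new Dictionary<string, object>
        {
            ["$schema"] = "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
            ["contentVersion"] = "1.0.0.0",
            ["parameters"] = new Dictionary<string, object>(),
            ["variables"] = new Dictionary<string, object>(),
            ["resources"] = Array.Empty<object>(),
            ["outputs"] = outputs
        };

        return JsonSerializer.Serialize(template);
    }
}
```

Inside the function you would then only validate the query parameter and return `GuidTemplateBuilder.Build(guids)` as the response body.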

Testing the function

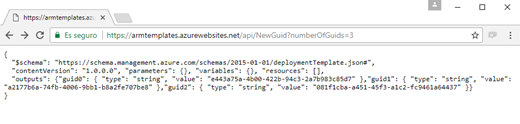

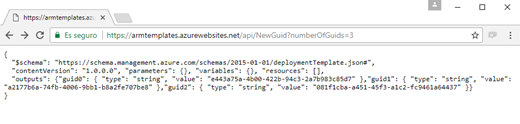

Once you have saved the function, you can test it with a web request. In my case, when I call the URL https://armtemplates.azurewebsites.net/api/NewGuid?numberOfGuids=3 I get back a deployment template whose outputs section contains three GUIDs, guid0 to guid2.

You can pass the number of GUIDs to generate in the numberOfGuids query parameter; it accepts values from 1 to 1000 and defaults to 1 when omitted.
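To check the response programmatically, for instance to catch the kind of duplicated GUIDs that showed up during the GAB lab tests, a small reader can parse the returned template and verify every output. This is a sketch assuming System.Text.Json (.NET Core 3.0 or later); `GuidTemplateReader` and `ReadGuids` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical reader for the template returned by the NewGuid function.
public static class GuidTemplateReader
{
    // Returns the GUIDs in the "outputs" section in document order
    // (guid0, guid1, ...). Throws if a value is not a GUID or is repeated.
    public static List<Guid> ReadGuids(string templateJson)
    {
        using var doc = JsonDocument.Parse(templateJson);
        var outputs = doc.RootElement.GetProperty("outputs");

        var result = new List<Guid>();
        var seen = new HashSet<Guid>();
        foreach (var output in outputs.EnumerateObject())
        {
            var text = output.Value.GetProperty("value").GetString();
            if (!Guid.TryParse(text, out var guid))
                throw new FormatException($"Output '{output.Name}' is not a GUID: {text}");

            // HashSet.Add returns false when the GUID was already seen
            if (!seen.Add(guid))
                throw new InvalidOperationException($"Duplicated GUID in output '{output.Name}'");

            result.Add(guid);
        }
        return result;
    }
}
```

To test a live function, you could download the body with `HttpClient.GetStringAsync` against your own function URL and pass the string to `ReadGuids`.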

Consuming the GUID template by using a nested template

As I documented in the initial GitHub repo, you can follow these examples to consume them in different ways:

Example 1. Getting a GUID and using it later

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {},
  "variables": {},
  "resources": [
    {
      "apiVersion": "2015-01-01",
      "name": "MyGuid",
      "type": "Microsoft.Resources/deployments",
      "properties": {
        "mode": "incremental",
        "templateLink": {
          "uri": "https://armtemplates.azurewebsites.net/api/NewGuid",
          "contentVersion": "1.0.0.0"
        }
      }
    }
  ],
  "outputs": {
    "result": {
      "type": "string",
      "value": "[reference('MyGuid').outputs.guid0.value]"
    }
  }
}
```

Example 2. Getting 2 GUIDs and using them later

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {},
  "variables": {},
  "resources": [
    {
      "apiVersion": "2015-01-01",
      "name": "MyGuids",
      "type": "Microsoft.Resources/deployments",
      "properties": {
        "mode": "incremental",
        "templateLink": {
          "uri": "https://armtemplates.azurewebsites.net/api/NewGuid?numberOfGuids=2",
          "contentVersion": "1.0.0.0"
        }
      }
    }
  ],
  "outputs": {
    "result0": {
      "type": "string",
      "value": "[reference('MyGuids').outputs.guid0.value]"
    },
    "result1": {
      "type": "string",
      "value": "[reference('MyGuids').outputs.guid1.value]"
    }
  }
}
```
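Writing result0, result1 and so on by hand gets tedious when you need many GUIDs. A hypothetical generator, `NestedGuidTemplate.Build` (again assuming System.Text.Json on .NET Core 3.0+), can emit a parent template in the shape of Example 2 for any count; the deployment name and function URL are values you supply, and the URL should point to your own function.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Encodings.Web;
using System.Text.Json;

// Hypothetical generator for a parent template shaped like Example 2.
public static class NestedGuidTemplate
{
    public static string Build(string deploymentName, string functionUrl, int numberOfGuids)
    {
        if (numberOfGuids <= 0 || numberOfGuids > 1000)
            throw new ArgumentOutOfRangeException(nameof(numberOfGuids));

        // Append the query parameter, keeping any existing query string (e.g. a function key)
        var separator = functionUrl.Contains("?") ? "&" : "?";
        var uri = functionUrl + separator + "numberOfGuids=" + numberOfGuids;

        // Expose guid0..guid(n-1) of the nested deployment as result0..result(n-1)
        var outputs = new Dictionary<string, object>();
        for (var i = 0; i < numberOfGuids; i++)
        {
            outputs["result" + i] = new Dictionary<string, string>
            {
                ["type"] = "string",
                ["value"] = $"[reference('{deploymentName}').outputs.guid{i}.value]"
            };
        }

        // The nested deployment that downloads the generated GUID template
        var nestedDeployment = new Dictionary<string, object>
        {
            ["apiVersion"] = "2015-01-01",
            ["name"] = deploymentName,
            ["type"] = "Microsoft.Resources/deployments",
            ["properties"] = new Dictionary<string, object>
            {
                ["mode"] = "incremental",
                ["templateLink"] = new Dictionary<string, string>
                {
                    ["uri"] = uri,
                    ["contentVersion"] = "1.0.0.0"
                }
            }
        };

        var template = new Dictionary<string, object>
        {
            ["$schema"] = "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
            ["contentVersion"] = "1.0.0.0",
            ["parameters"] = new Dictionary<string, object>(),
            ["variables"] = new Dictionary<string, object>(),
            ["resources"] = new object[] { nestedDeployment },
            ["outputs"] = outputs
        };

        // Relaxed escaping keeps the quotes in ARM expressions readable instead of \u0027
        var options = new JsonSerializerOptions
        {
            WriteIndented = true,
            Encoder = JavaScriptEncoder.UnsafeRelaxedJsonEscaping
        };
        return JsonSerializer.Serialize(template, options);
    }
}
```

Calling `NestedGuidTemplate.Build("MyGuids", yourFunctionUrl, 2)` should produce a template equivalent to Example 2, with your URL in place of the one shown.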

If you still have doubts about how to consume the outputs, check line 398 of the GAB Science Lab template: https://github.com/intelequia/GAB2017ScienceLab/blob/master/lab/assets/GABClient.json#L398

Hope this helps! Best regards, and happy coding!

On May 10, 2018, in Room 1.4 of the Escuela Superior de Ingeniería y Tecnología, we are lucky to have the Microsoft Spain Education team in Tenerife to present a session on Microsoft Azure in Education and Research.