In the last few days I have had several conversations about YAML Azure Pipelines, with some people asking me why they should move from the old “moving boxes” mode to YAML. So here goes my opinion on this. Disclaimer: this is just an opinion post, so don’t expect instructions here, just my one (not even two) cent.

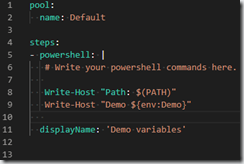

What are YAML pipelines? This is the new model for build pipelines: instead of using the visual designer, we go directly with configuration as code, using the YAML language to define our build process. YAML has been around for some time; it is widely used for Docker, Kubernetes, and other build systems like AppVeyor, so let’s just say it is a standardized way to define “things” in code. So it seems like the natural evolution for our builds, as more and more people and companies adopt it, making it a “natural” language to express them in. Is it difficult? For sure it has its problems, usually around spaces and tabs, but more and more tools (plugins for VS Code and other editors, for example) come to our help.
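To give an idea of what “build as code” looks like, here is a minimal sketch of a pipeline file. The branch name, the agent image, and the placeholder step are my assumptions for illustration, not something taken from a real project:

```yaml
# azure-pipelines.yml (hypothetical minimal example)
# Run the pipeline whenever something is pushed to main (assumed branch name).
trigger:
  - main

# Use a Microsoft-hosted agent (assumed image).
pool:
  vmImage: ubuntu-latest

steps:
  # Placeholder step; a real build would call your compiler or build tool here.
  - script: echo "Building..."
    displayName: Run a placeholder build step
```

Note that the indentation uses spaces only; this is exactly where the spacing issues mentioned above tend to bite.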

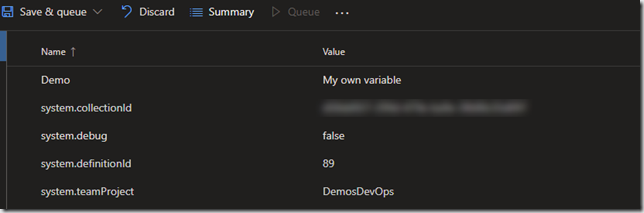

Why YAML pipelines? Configuration as code. Our code evolves, and our build configuration usually evolves too; not at the same pace, for sure, but it evolves. So we must keep the configuration tied to the code, and what better way than representing our configuration as code? We end up storing our way to build right next to the code (another opinion: keep your YAML builds in the same repo as your code). So when we change the structure of our code, adding or removing projects or introducing new ways to build, the way to build changes right along with the code. If we need to build an older version, we will have the exact build configuration right there with it, so we won’t have to do fancy things like recovering an old version of the build from the definition history, as with the “boxes” approach.
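As a rough sketch of that idea, imagine a pipeline file committed at the root of the repo, next to the projects it builds. The `src/` folder layout, the branch names, and the choice of a .NET build are assumptions on my side:

```yaml
# azure-pipelines.yml, versioned together with the source code
trigger:
  branches:
    include:
      - main
      - release/*   # assumed branch naming

pool:
  vmImage: windows-latest

steps:
  - task: DotNetCoreCLI@2
    displayName: Build all projects
    inputs:
      command: build
      # Hypothetical layout: every project under src/ is picked up.
      projects: 'src/**/*.csproj'
```

If a commit adds or removes a project, or changes how things are built, the change to this file travels in the same commit, so checking out an old tag also gives you the build definition that matched it.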

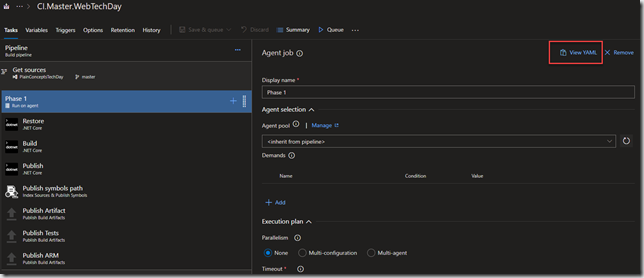

Is it ready for use? Although I agree the documentation is sometimes not up to date, we should really go with YAML builds, and it is easy to get started. In any build definition we can get the YAML by clicking this button:

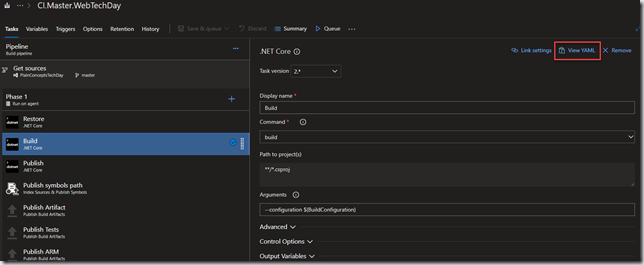

Also, for each of the tasks in an old-model definition we can export the YAML, so we can start learning from there.
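Just as an illustration, the YAML for a single task looks roughly like the snippet below. The task and its values are an example I picked (a typical artifact publish step), not the output of a specific definition:

```yaml
steps:
  - task: PublishBuildArtifacts@1
    displayName: 'Publish Artifact: drop'
    inputs:
      # Folder the earlier build steps copied their output to.
      PathtoPublish: '$(Build.ArtifactStagingDirectory)'
      # Assumed artifact name.
      ArtifactName: drop
```

Pasting fragments like this into a YAML pipeline, one task at a time, is a gentle way to learn the syntax.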

I’m not saying you should go right away and modify all your existing builds to move them to YAML, but for the next build definition you create, go with YAML and start using configuration as code.