Over the last few weeks I have had several interesting conversations about feature flags, so I wanted to do a little brain dump of my ideas and views on them. These are my views at this moment, and they can change :). Also, I have not used them heavily in production yet. I have tried them in production, but only in small scenarios, so this is what I have learned and discussed with people who have been using them a lot.

First things first: for me, feature flags are all about experimentation and testing hypotheses. I only use short-lived feature flags, so the code related to the flag itself does not stay in the codebase for long. Usually they are “ifs” or lambdas, so once the hypothesis has been tested I clean them up: if the hypothesis for a particular feature holds, the flag check goes away and the rest of the code remains; if not, the rest of the code is removed as well. So when using a feature flag, it is important to track its life cycle so no “dead code” is left behind.

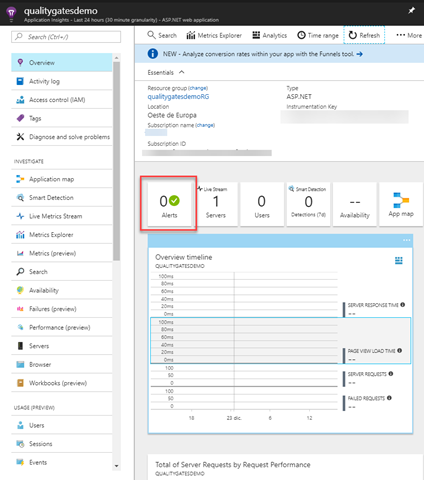
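To make the “ifs or lambdas” idea a bit more concrete, here is a minimal sketch of a short-lived flag. Everything in it is hypothetical and only for illustration: the `FeatureFlag` class, the `remove_by` date, and the checkout functions are names I made up, not part of any real library.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass
class FeatureFlag:
    """Hypothetical short-lived flag that carries its own clean-up date."""
    name: str
    hypothesis: str      # what we expect to learn from the experiment
    remove_by: date      # after this date the flag should be gone from the code
    enabled: bool = False

    def is_expired(self, today: date) -> bool:
        # A flag past its clean-up date is a candidate for "dead code".
        return today > self.remove_by


def old_checkout(total: float) -> str:
    return f"Pay {total:.2f}"


def new_checkout(total: float) -> str:
    return f"Pay {total:.2f} in one click"


def checkout(flag: FeatureFlag, total: float) -> str:
    # The "if" style: one branch disappears once the hypothesis is settled.
    if flag.enabled:
        return new_checkout(total)
    return old_checkout(total)


def choose(flag: FeatureFlag,
           on: Callable[[float], str],
           off: Callable[[float], str]) -> Callable[[float], str]:
    # The "lambda" style: pick the behaviour once and pass it around.
    return on if flag.enabled else off
```

If the hypothesis holds, `checkout` shrinks to a plain call to `new_checkout` and `old_checkout` is deleted; if it does not, `new_checkout` is the part that goes away.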

The next thing is almost obvious: if we are running an experiment or testing a hypothesis, how and when do we know whether it worked? We must work with the business on these decisions and ask the stakeholders and the team that question. This leads us to something crucial: which metrics do we need to track for the feature we are experimenting with? Be sure to track usage, provide good metrics, and offer a way to check them so you can make a decision about the feature. Deciding which metrics to use and when to go ahead with the feature is probably harder than the implementation.

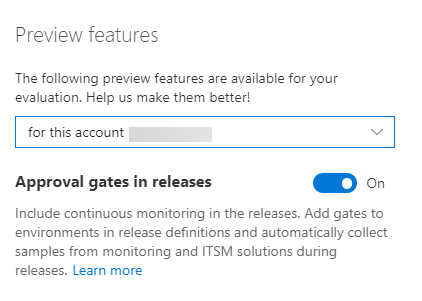

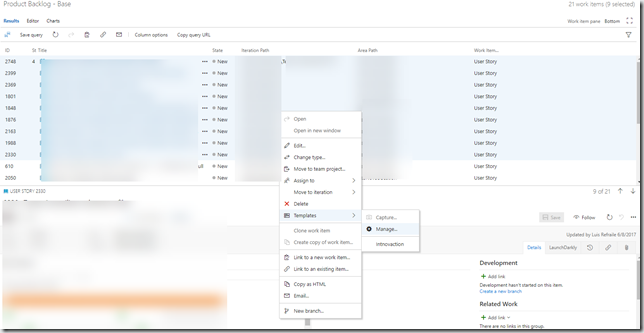
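As a rough illustration of tracking usage and deciding on a metric, the sketch below counts how many users saw each variant and how many of them converted. The `ExperimentMetrics` class, the `"on"`/`"off"` variant names, and the thresholds are all assumptions of mine; the real metric and the threshold are exactly what you have to agree on with the business.

```python
from collections import Counter
from typing import Optional


class ExperimentMetrics:
    """Hypothetical in-memory counters for one experiment."""

    def __init__(self) -> None:
        self.exposures: Counter = Counter()    # users who saw a variant
        self.conversions: Counter = Counter()  # users who did what we hoped

    def record_exposure(self, variant: str) -> None:
        self.exposures[variant] += 1

    def record_conversion(self, variant: str) -> None:
        self.conversions[variant] += 1

    def conversion_rate(self, variant: str) -> float:
        shown = self.exposures[variant]
        return self.conversions[variant] / shown if shown else 0.0


def hypothesis_holds(metrics: ExperimentMetrics,
                     min_lift: float,
                     min_exposures: int) -> Optional[bool]:
    """Return None while there is not enough data, else True or False."""
    enough = min(metrics.exposures["on"], metrics.exposures["off"])
    if enough < min_exposures:
        return None  # too early to decide
    lift = metrics.conversion_rate("on") - metrics.conversion_rate("off")
    return lift >= min_lift
```

This is deliberately naive (no statistical significance test), but it shows the two things that matter here: the metric is recorded per variant, and the rule for "it worked" is written down before the experiment ends.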

How will we handle activation and deactivation? Some features can even be enabled or disabled by the users themselves, but not always, so we have to decide how to do it. Maybe we will enable them based on percentages, geolocation, or even automatically based on metrics. So when you go ahead with a feature flag system, be sure you know how you are going to activate flags during their life. For me this is tightly coupled to the deployment of our application, so we must support enabling and disabling them not only via a UI or configuration files, but also from external systems, such as whatever system we chose for rolling them out, through APIs or similar mechanisms.

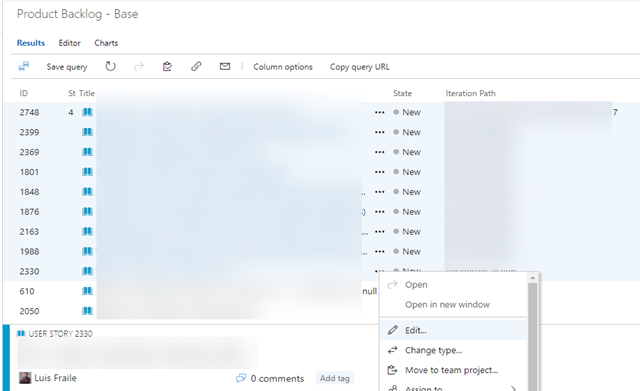

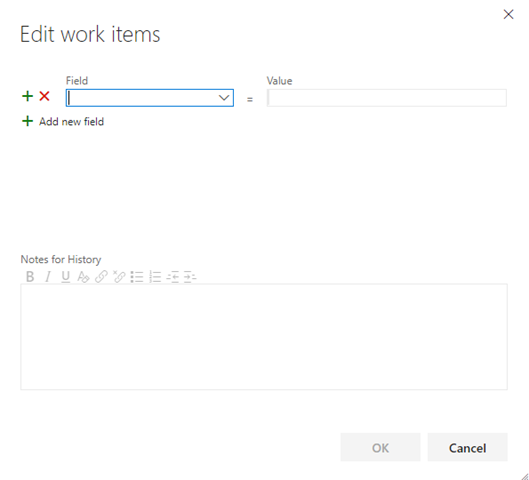
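Percentage and geolocation based activation can be sketched like this. It is only one possible approach, assuming a stable user id and a country code are available; `RolloutRule`, `bucket_for`, and `is_enabled` are hypothetical names, not the API of any particular flag system.

```python
import hashlib
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class RolloutRule:
    """Hypothetical activation rule for one flag."""
    flag_name: str
    percentage: int = 0                      # 0..100 of users get the feature
    countries: FrozenSet[str] = frozenset()  # empty set means "any country"


def bucket_for(flag_name: str, user_id: str) -> int:
    # Hash flag name plus user id so each user lands in a stable bucket (0..99)
    # and different flags do not always pick the same users.
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(rule: RolloutRule, user_id: str, country: str) -> bool:
    if rule.countries and country not in rule.countries:
        return False
    return bucket_for(rule.flag_name, user_id) < rule.percentage
```

Because the bucket depends only on the flag name and the user id, raising `percentage` from 10 to 50 keeps the first 10% of users enabled, and an external system (a deployment pipeline, for example) only has to change the rule, not the code.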

Last but not least, visibility. I started by saying I prefer short-lived flags, so how many flags do we have in our code? What is their state (enabled/disabled) across our user base? Keep them tracked with whatever mechanism you can provide, so no “dead code” is left behind.

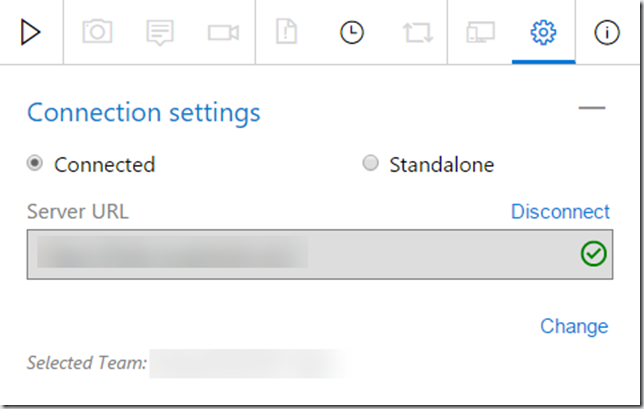
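One simple way to get that visibility is a small registry that answers both questions: which flags exist and what state they are in, and which ones have lived too long. The sketch below is just an assumption of how that could look; `FlagRecord`, the 30-day limit, and the sample data are invented for the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass(frozen=True)
class FlagRecord:
    """Hypothetical registry entry for one flag."""
    name: str
    owner: str
    created: date
    enabled_percentage: int  # share of the user base that has the flag on


def state_summary(records: List[FlagRecord]) -> Dict[str, str]:
    # Human-readable state per flag across the user base.
    def describe(pct: int) -> str:
        if pct == 0:
            return "disabled"
        if pct == 100:
            return "enabled"
        return f"partial ({pct}%)"
    return {r.name: describe(r.enabled_percentage) for r in records}


def stale_flags(records: List[FlagRecord], today: date,
                max_age_days: int = 30) -> List[str]:
    # Flags older than the agreed lifetime are candidates for clean-up.
    return [r.name for r in records if (today - r.created).days > max_age_days]


registry = [
    FlagRecord("one-click-checkout", "payments", date(2024, 1, 10), 100),
    FlagRecord("new-search", "discovery", date(2024, 3, 1), 30),
]
print(state_summary(registry))
print(stale_flags(registry, today=date(2024, 3, 15)))
```

Running a report like this regularly (or failing a build when `stale_flags` is not empty) is one way to keep short-lived flags actually short-lived.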

If you want to check out a couple of feature flag systems: if you are on .NET Core you have this library, created by the Xabaril team, which is interesting, and I know they are working on improving it. If you are looking for commercial products, LaunchDarkly is probably one of the feature flag systems growing fastest in use and capabilities.

Feel free to share your thoughts in the comments or just reach out to me on Twitter.